Second blog post in a series of three. Read part 1 now; part 3 is coming soon.

In the previous blog post we introduced the core concept of decentralised governance, where no single person, entity or organisation can control the direction of change in a public Network.

Any change is directed by the democratic votes of an ever-growing, diverse collective. This means that no single Node Operator or User, nor the collective of Users on the Network, can be held directly liable. A Network where accountability is diluted in this way is known as sufficiently decentralised.

The concept of Entropy helps to transparently and effectively achieve sufficient decentralisation, through decentralised governance.

Read part 1 here, featuring our super funky Entropy sandcastle.

The genesis event

Like a perfectly formed sandcastle with Low Entropy, any decentralised ecosystem must begin with a structure, set of rules, parameters and default settings which govern the scope, boundaries and objectives of the Network — usually set by a small group of individuals.

For this reason, every decentralised Network begins with a Low Entropy genesis event, from which point Entropy increases.

This can be thought of like a Big Bang, starting a new system with a set of fundamental rules and boundaries.

Even the most high-profile blockchains, such as Bitcoin, began with a well-known ‘genesis block’. Also known as Block 0, the Bitcoin genesis block was the first block created on the Bitcoin ledger, and every single Bitcoin block can trace its ancestry back to it.

Interestingly, the next block, known as Block 1, wasn’t mined until six days after the genesis block. This is considered a very strange occurrence for the protocol, since the target gap between blocks is ~10 minutes.

Many have questioned why Satoshi Nakamoto, the famously pseudonymous creator of Bitcoin, caused the delay. One popular theory is that Nakamoto spent the first six days testing the protocol and its stability, before backdating the timestamp.

Others believe that Nakamoto wanted to play God and recreate the story of the world being created in six days… Either way, just as with the Big Bang, a set of default settings and parameters had to be in place before Bitcoin could develop autonomously.

The point of this digression, however, is to highlight that for every Network that eventually reaches a point of sufficient decentralisation and High Entropy, there is a Big Bang event, followed by a period of tinkering and adjustment as Entropy begins to increase from its low starting point.

And this is where we start at cheqd.

cheqd are defining a set of baseline conditions and rules for the cheqd Network, using Cosmos’ inbuilt decentralised governance capabilities.

And we want to clearly lay out how the Network will enable a smooth transition from a Low Entropy genesis event to a High Entropy, sufficiently decentralised structure.

We will be publishing these default parameters and our roadmap for this journey in our Governance Framework, which will appear on our GitHub shortly. Stay tuned!

How do we calculate Entropy?

Entropy is a composite function of several factors: the number of validators, the number of Users with staked tokens, the number of accepted proposals, the diversity of validators and the capacity for a Network overhaul by a proportion of the Network validators.

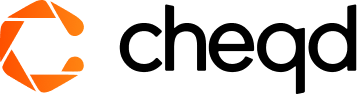

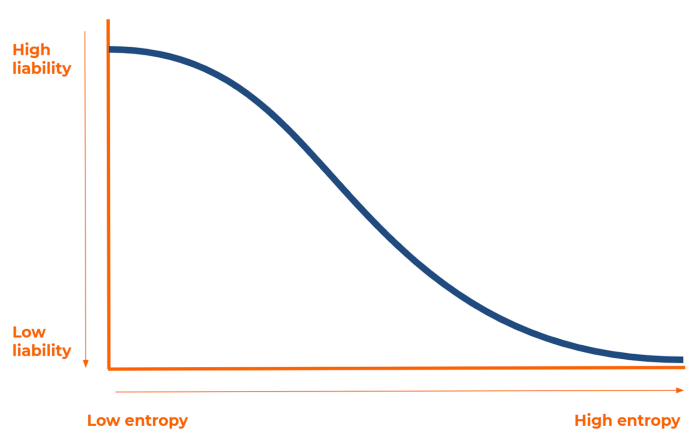
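To make the idea of a composite function more concrete, here is a minimal sketch in Python. It is not the cheqd scoring model, which is covered in Part 3. The equal weighting, the saturating curves and their scales, the use of normalised Shannon entropy of validator stakes as the "diversity" measure, and the reading of "overhaul capacity" as the share of validators needed to force an overhaul are all assumptions made purely for illustration, as are the names `NetworkSnapshot`, `stake_diversity` and `entropy_score`.

```python
import math
from dataclasses import dataclass


@dataclass
class NetworkSnapshot:
    """Hypothetical inputs for an illustrative Entropy score."""
    validator_stakes: list[float]  # stake held by each validator
    stakers: int                   # Users with staked tokens
    accepted_proposals: int        # governance proposals accepted so far
    overhaul_share: float          # assumed: share of validators (0..1) needed to force an overhaul


def saturating(count: int, scale: float) -> float:
    """Map a raw count onto [0, 1) with diminishing returns (assumed curve)."""
    return 1.0 - math.exp(-count / scale)


def stake_diversity(stakes: list[float]) -> float:
    """Normalised Shannon entropy of stake shares: 0 = one holder, 1 = perfectly even."""
    total = sum(stakes)
    if total <= 0 or len(stakes) < 2:
        return 0.0
    shares = [s / total for s in stakes if s > 0]
    h = -sum(p * math.log(p) for p in shares)
    return h / math.log(len(stakes))


def entropy_score(s: NetworkSnapshot) -> float:
    """Equal-weighted mean of five components, each in [0, 1] (weights are an assumption)."""
    components = [
        saturating(len(s.validator_stakes), scale=100),
        saturating(s.stakers, scale=10_000),
        saturating(s.accepted_proposals, scale=50),
        stake_diversity(s.validator_stakes),
        min(max(s.overhaul_share, 0.0), 1.0),
    ]
    return sum(components) / len(components)


# A genesis-like Network (few validators, concentrated stake) versus a mature one.
genesis = NetworkSnapshot([90.0, 5.0, 5.0], stakers=10, accepted_proposals=0, overhaul_share=0.34)
mature = NetworkSnapshot([1.0] * 150, stakers=50_000, accepted_proposals=200, overhaul_share=0.67)
print(entropy_score(genesis), entropy_score(mature))
```

Under these assumptions the genesis-like snapshot scores much lower than the mature one, mirroring the journey from Low Entropy to High Entropy described in this post.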

At a high level, without calculating Entropy mathematically, it can be visualised as having an inverse relationship with liability. At the genesis state, the liability on those with control over the Network will be relatively high. This will quickly tend downwards as the Network expands and decision-making is disseminated among a collective.

Given the legal grey area around the point of sufficient decentralisation, we are setting a high threshold of decentralisation which we want to achieve.

On a visual level, this can be shown as follows.

Through such delineation, we believe that we are positioning ourselves sensibly for any updated legal opinion on the topic.

Future proofing cheqd

As explained in part 1, Entropy is such an important concept in this context because it ties directly into liability and to the legal classification of tokens.

As seen with Ripple, it is possible for a Network to be partially decentralised while a single entity still retains a controlling stake or pulling power in it.

By introducing Entropy, we are reducing the surface area for Regulators to scrutinise cheqd in the same way as Ripple, future-proofing cheqd as a Network and a utility.

In turn, we hope that this gives greater confidence to our Node Operators, token holders and community in the strength of the token.

Greater clarity in a grey area

To date, the concept of Entropy has only been discussed in roundabout ways across legal, journalistic and academic media in the context of decentralised governance.

Coining the term and defining the parameters that drive an increase in Entropy should help decentralised governance architectures achieve legal clarity going forward.

Of course, our definition of Entropy does not have legal standing or significance, but we hope it can be used by regulators as a tool or reference point, or at least as a sensible classification model.

In the final blog post in this series, Part 3, we will show how we score and calculate Entropy, and therefore, how cheqd will need to develop to achieve sufficient decentralisation.

In the meantime, join our rapidly growing Telegram community to stay updated with our most recent news and insights.

P.S. We’re also on Twitter and LinkedIn, make sure to cheq in!